A Note From the Author

As a systems thinker, I weave together solutions from across disciplines to tackle the biggest challenge of our time.

In 2022, the American Southwest stood on the brink of catastrophe. The Colorado River, lifeline to forty million people, fell to its lowest levels in recorded history. Reservoirs that once seemed unshakable, Lake Mead and Lake Powell, approached the levels at which their dams could no longer generate electricity, with “dead pool,” the point at which water can no longer flow downstream past a dam, looming beyond. Farmers cut plantings, hydro turbines slowed, and cities faced the unthinkable prospect of running dry.

That same summer, Europe endured its worst drought in five centuries. The Rhine River, once a roaring artery of commerce, became a trickle. Barges carrying coal, grain, and chemicals ran aground on exposed riverbeds. Energy supplies faltered, factories idled, and the continent’s industrial heartland was reminded that even modern economies remain tethered to the rhythms of water.

In the Horn of Africa, the consequences were even more devastating. Five consecutive rainy seasons failed between 2020 and 2023, leaving more than thirty million people in Ethiopia, Kenya, and Somalia in acute food insecurity. Crops withered, livestock died, and entire communities faced the specter of famine. Climate change, once an abstraction, was visible in the empty fields and hollow eyes of children.

Water scarcity impacts 40% of the world’s population, and as many as 700 million people are at-risk of being displaced as a result of drought by 2030.

- World Health Organization (2019)

In 2023, taps in Montevideo, the capital of one of South America’s wealthiest countries, ran with brackish, salty water as reservoirs dried to mud. For months, well over a million residents in and around the capital had no reliable supply of fresh drinking water from the tap. Grocery store shelves were stripped bare of bottled water. A modern city, accustomed to abundance, discovered how quickly climate change can erase certainty.

While the world reeled from drought on nearly every continent, a technological revolution was quietly consuming water in astonishing volumes. On November 30, 2022, ChatGPT exploded into the public consciousness. Within two months it reached an estimated 100 million users, at the time the fastest adoption of any consumer app on record. What began as a curiosity, an AI that could write essays, code, and poems, quickly became a ubiquitous everyday tool.

Few paused to ask what powered this miracle. Every query sent to an AI model runs on computation, and that computation requires electricity and cooling. Depending on the model’s efficiency and the infrastructure of the data center, the water footprint of a single interaction can range from as little as 0.26 milliliters, about five drops, to as much as 519 milliliters, roughly a standard half-liter bottle, to generate a 100-word email. Multiply that by billions of daily requests, and the hidden cost becomes staggering.
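To put the per-interaction range of 0.26 to 519 milliliters in perspective, here is a back-of-the-envelope sketch. The volume of one billion requests a day is an illustrative assumption, not a measured figure for any service, and the two per-query values come from different models and measurement methods, so treat the output as a range of scales rather than a forecast.

```python
# Back-of-the-envelope estimate of daily and yearly water use for AI queries.
# ASSUMPTION: 1 billion requests per day is an illustrative round number,
# not a measured figure for any service.
DAILY_REQUESTS = 1_000_000_000

# Per-interaction water footprint in milliliters (low and high estimates).
LOW_ML = 0.26
HIGH_ML = 519

ML_PER_LITER = 1_000
LITERS_PER_CUBIC_METER = 1_000


def daily_liters(ml_per_request: float, requests: int = DAILY_REQUESTS) -> float:
    """Total water per day, in liters, for a given per-request footprint."""
    return ml_per_request * requests / ML_PER_LITER


def yearly_cubic_meters(ml_per_request: float, requests: int = DAILY_REQUESTS) -> float:
    """Total water per year, in cubic meters, assuming constant daily volume."""
    return daily_liters(ml_per_request, requests) * 365 / LITERS_PER_CUBIC_METER


for label, ml in (("low", LOW_ML), ("high", HIGH_ML)):
    print(f"{label}: {daily_liters(ml):,.0f} L/day, "
          f"{yearly_cubic_meters(ml):,.0f} m^3/year")
```

Under that assumption, the low estimate works out to about 260,000 liters a day and the high estimate to about 519 million liters a day, a gap of roughly 2,000 times. The spread is the point: how efficient the model is and how the data center is built matter as much as how many people use it.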

A 1 megawatt (MW) data centre can use up to 25.5 million litres of water annually just for cooling – equivalent to the daily water consumption of approximately 300,000 people.

- World Economic Forum (2024)

It was a jarring paradox. As rivers and reservoirs ran dry, the digital age poured water into sprawling data centers, cooling the powerful computer chips that drive AI just to keep them from overheating. Artificial intelligence arrived as both a dazzling breakthrough and an invisible drain on already scarce resources.

This is the double-edged nature of AI. It could accelerate climate collapse through unchecked growth, or—if guided with intention—become one of the most powerful tools humanity has to adapt and fight back. In a world running short on time, we cannot afford to ignore either possibility.

Artificial intelligence doesn’t exist in the cloud—at least not in the airy, weightless way the word suggests. Behind every AI query lies a sprawling network of data centers, warehouses packed with racks of high-performance chips humming around the clock, fed by electricity and cooled by water. The footprint of this infrastructure is far larger than most people realize.

Training a frontier model—the kind capable of writing essays, generating images, or simulating climate patterns—requires staggering amounts of energy. Each new generation demands more computing power than the last, and the scale is accelerating fast. According to new projections from the Lawrence Berkeley National Laboratory, by 2028 more than half of all electricity used by data centers will go toward AI. At that point, AI alone could consume as much power every year as nearly a quarter of all U.S. households.

OpenAI aims to spend $500 billion—more than the Apollo space program—on the Stargate initiative where they will build as many as 10 data centers (each of which could require five gigawatts, more than the total power demand from the state of New Hampshire).

- MIT Technology Review (2025)

The emissions don’t stop once a model is trained. To stay online, these systems rely on vast fleets of servers running day and night to handle billions of requests. This process, known as inference, now accounts for most of AI’s ongoing footprint. Running a single query through a large language model can use several times more electricity than a typical Google search. At global scale, that difference is enormous—billions of prompts compounded daily into gigawatt-hours of new demand on already strained power grids, much of it still fueled by carbon.

The environmental impact doesn’t stop with electricity. Data centers must also remain cool to prevent chips from overheating. Traditionally, that has meant vast amounts of water that is pumped, circulated, and evaporated to draw heat away from processors. Microsoft’s global water consumption jumped 34 percent from 2021 to 2022, to nearly 1.7 billion gallons, an increase outside researchers tied largely to its AI work, including the Iowa data centers where OpenAI’s GPT-4 was trained. Each chatbot response, image generation, or voice query becomes a hidden sip of the world’s most essential resource.

A human adult needs about two to three liters of water a day to survive, yet more than a billion people don’t have access to clean drinking water. Nearly three billion live with scarcity for at least part of the year, and millions still die from diseases caused by unsafe water. Set against that reality, the billions of gallons funneled into cooling AI servers take on a different weight.

Water withdrawal of global AI is projected to reach 4.2 to 6.6 billion cubic meters in 2027, which is more than the total annual water withdrawal of half of the U.K.

- Communications of the ACM, “Making AI Less ‘Thirsty’” (2025)

The consequences ripple outward. In areas where AI servers cluster—from the American Midwest to Northern Europe and parts of Asia—local utilities face new pressures to balance water rights, agriculture, and industry. Communities living near these facilities often bear the costs while global tech companies reap the rewards. The manufacturing pipeline adds to the strain as well, since the advanced chips that power AI depend on rare minerals, vast supply chains, and factories with heavy energy demands.

The result is sobering. AI’s meteoric rise has added an invisible, resource-hungry infrastructure to a world already straining under its climate footprint. Growth is so rapid it risks outpacing any chance of sustainability planning. The faster it accelerates, the harder it becomes to rein in emissions, water withdrawals, and material demands.

AI’s appetite for energy and water is real—but it doesn’t have to remain unchecked. Just as we learned to regulate factories and transportation, the infrastructure behind AI can be reshaped. Companies are already experimenting with carbon-aware scheduling, training large models when renewable power is abundant on the grid. Others are redesigning facilities to cut their dependence on freshwater. In China, new coastal data centers are being built to use seawater for cooling, sparing inland communities the strain. Microsoft has even tested underwater server pods, cooled naturally by ocean currents.
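Carbon-aware scheduling can be as simple as choosing when a flexible job runs. The sketch below picks the start hour that minimizes total forecast grid carbon intensity over a job’s duration, using a sliding window. The function name and the hourly forecast values are hypothetical placeholders; a real system would pull forecasts from a grid operator or a carbon-intensity data service and weigh other constraints too.

```python
from typing import Sequence


def best_start_hour(forecast: Sequence[float], job_hours: int) -> int:
    """Return the start index whose window of `job_hours` consecutive hours
    has the lowest total forecast carbon intensity (gCO2/kWh).

    Uses a sliding-window sum so each hour is added and removed only once.
    Ties go to the earliest start.
    """
    if not 0 < job_hours <= len(forecast):
        raise ValueError("job_hours must fit inside the forecast window")
    window = sum(forecast[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - job_hours + 1):
        # Slide the window one hour: drop the oldest hour, add the next one.
        window += forecast[start + job_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


# Hypothetical 12-hour forecast: intensity dips midday as solar output peaks.
forecast = [450, 440, 430, 380, 300, 220, 180, 170, 210, 320, 410, 460]
start = best_start_hour(forecast, job_hours=3)
print(f"Start the 3-hour job at hour {start}")
```

With this made-up forecast, the job would start at hour 6, the three-hour window with the lowest combined intensity. Real schedulers also balance deadlines, electricity prices, and moving work between regions, which this sketch ignores.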

Energy is the other half of the equation. Powering fleets of high-performance processors with fossil fuels only deepens the crisis, so big tech is searching for alternatives. Some firms are locking in massive clean-power purchases, with entire wind and solar farms built to feed their servers. Others are going further. Google has moved into the nuclear space, exploring small modular reactors (SMRs) to supply steady, carbon-free power for its AI data centers and reduce its reliance on coal and gas. Whether these ventures succeed at scale remains to be seen, but the message is clear: if AI is to expand, it must do so on a cleaner, more sustainable foundation.

These efforts are only a beginning, but they prove something important—AI’s footprint isn’t fixed. With the right choices, it can grow without overwhelming grids or draining rivers. But if we fail to make those choices, the same technology that could help us solve the climate crisis will end up accelerating it. Every algorithm we train and every data center we build will either move us closer to sustainability—or further away from it.

As we bring the footprint under control, AI’s benefits come into sharper view. The same algorithms that demand vast resources can also be directed toward solutions. Fed the right data, they can sift through complexity at a scale no human team could manage, spotting inefficiencies, predicting risks, and testing ideas before they are built in the real world. Some of the most compelling applications are already underway—and they’re not theoretical. They’re saving lives and cutting emissions today.

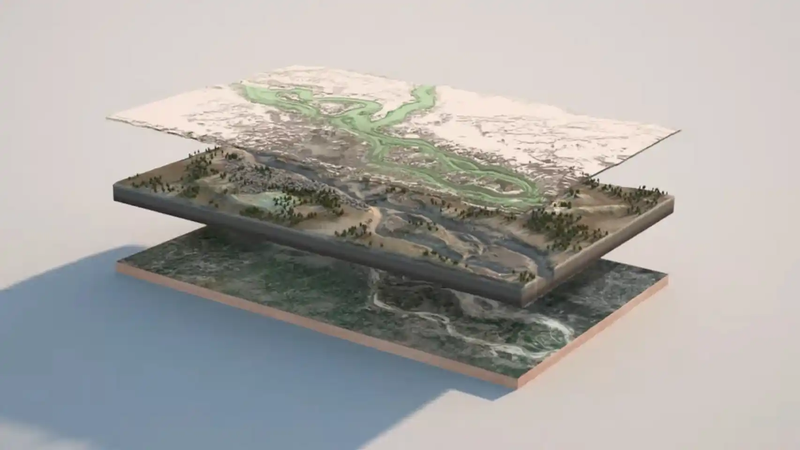

One of the most powerful examples is in disaster prediction. In 2021, Google’s AI-driven flood forecasting system sent alerts to more than 23 million people in India and Bangladesh, and it has since expanded to dozens of countries, including many in Africa. Trained on decades of hydrological data, it can now predict not only whether a river will flood, but how deep the water will get and where it will spread. In parts of India and Bangladesh, that means communities can stage supplies, move livestock, and evacuate days earlier, turning vague warnings into actionable plans that save lives.

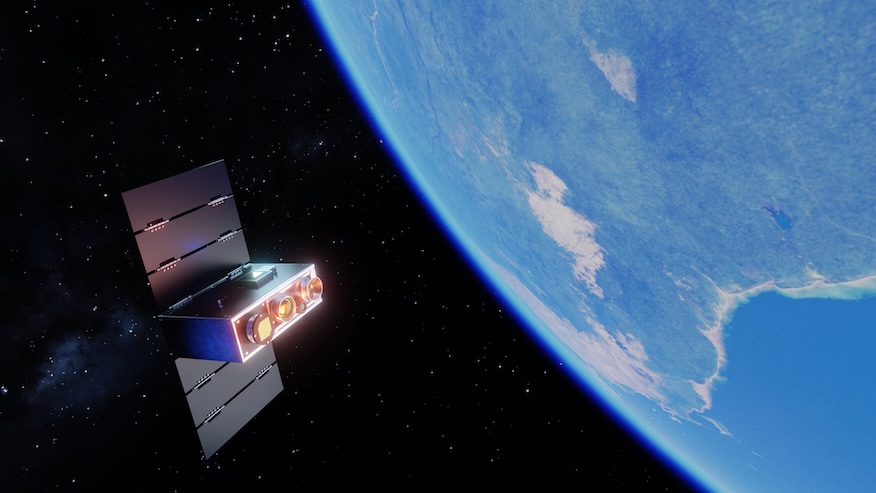

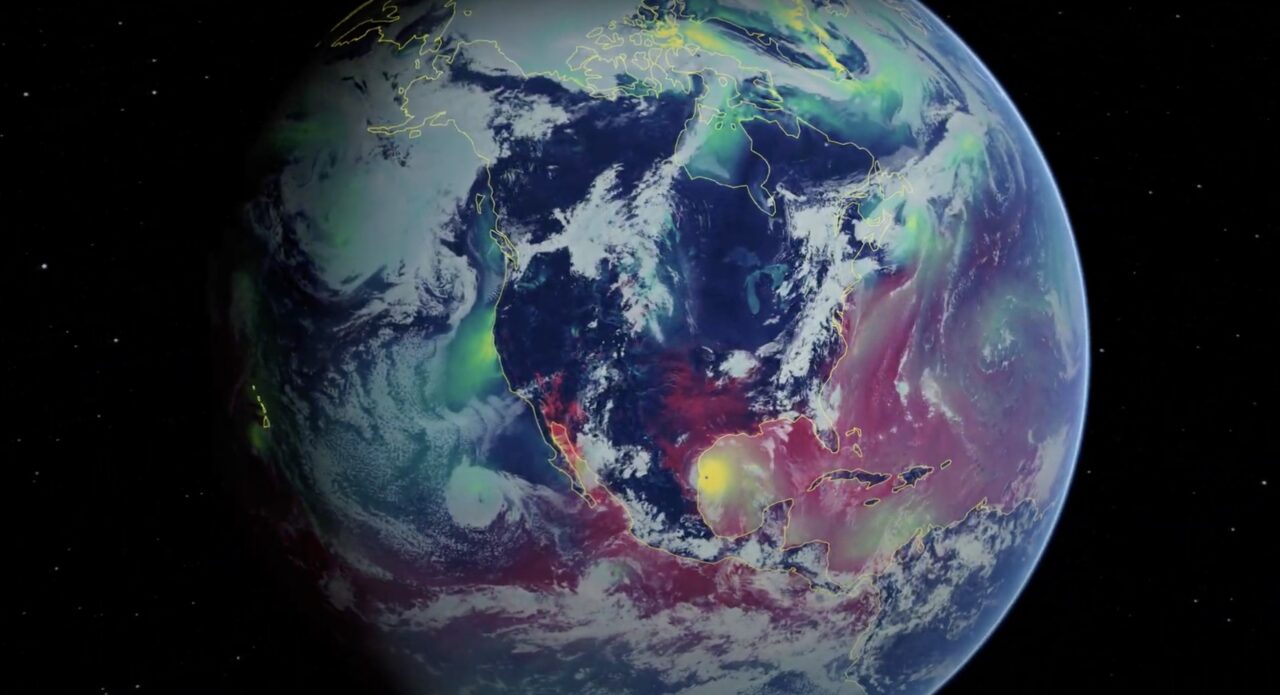

AI is also transforming our ability to forecast and respond to climate extremes. New models can simulate weather systems with unprecedented speed and accuracy. Platforms like NVIDIA’s Earth-2 are pushing toward real-time “digital twins” of the planet—virtual models that can test scenarios and anticipate disasters hours or even days earlier. These advances won’t prevent floods or fires outright, but they can make response faster, smarter, and far more effective.

AI is emerging as a transformative general-purpose technology in scientific research that can unearth discoveries that would have otherwise remained hidden. AI has the potential to transform every scientific discipline.

- World Economic Forum, “Top 10 Emerging Technologies of 2024”

Wildfires are another frontier. By combining satellite data, weather forecasts, and terrain maps, AI can now identify when and where conditions are ripe for ignition—sometimes down to a few hundred square meters and a single day’s forecast. A university team recently built a system that predicts lightning-caused fires with over 90 percent accuracy. On the ground, startups like Pano AI are deploying camera networks and image-recognition software that spot smoke the moment it appears, giving firefighters precious lead time. In the sky, companies like OroraTech are using thermal-imaging satellites to detect fire hotspots in near real time—tracking vast forest regions that once burned unnoticed for hours.

AI is also helping us see what was once invisible. Montreal-based GHGSat operates satellites that use AI and hyperspectral imaging to pinpoint methane leaks from oil and gas operations, turning atmospheric data into actionable repair maps. The Canadian Space Agency is testing satellite-based AI systems to detect methane offshore, while other networks now monitor illegal fishing fleets and track deforestation across the tropics. These are the same capabilities that once belonged only to governments and militaries—now harnessed for environmental protection.

As AI continues to develop, it will play a crucial role in the formulation of global food security and sustainability in addressing population growth demands in an environmentally sensitive manner.

- Journal of Agriculture and Food Research, Volume 20 (2025)

AI’s reach even extends to food security. In agriculture, algorithms are guiding irrigation, identifying crop diseases, and reducing waste in ways that conserve water and cut emissions. Systems like CropX use soil data and weather modeling to optimize irrigation, while Plantix helps farmers diagnose plant disease with a single photo. Together, these tools offer farmers a level of precision once unimaginable, helping them adapt to drought, protect yields, and reduce fertilizer use in an increasingly volatile climate.

But AI isn’t just helping us anticipate threats—it’s also accelerating the search for solutions. In the lab, algorithms are helping researchers develop new battery chemistries, advanced materials, and more efficient solar cells in a fraction of the time traditional methods would take. Breakthroughs that once might have taken decades of trial and error can now be identified in months, compressing the timeline for clean-technology development.

In all of these areas, the technology is not replacing human expertise—it’s extending it. Scientists, engineers, and policymakers still make the decisions. What AI offers is speed, scale, and the ability to navigate complexity—qualities that could prove essential in a crisis that demands all three. The tools we build today will decide whether AI accelerates the problem or becomes a cornerstone of the solution.

The promise is real. But so are the risks. If AI is to play a role in fighting climate change, we have to confront its darker side. The risks fall into three broad categories—economic, cultural, and existential. Each has the potential not just to slow progress, but to fracture the trust and stability we need to act at all.

The first risk is economic. AI could hollow out entire sectors of work, from call centers to accounting to design and film studios. For many, it won’t just be an assistant; it will be a replacement. Jobs that once supported middle-class families could vanish, while the tools to replace them are owned by a handful of corporations and billionaires.

The impact would go beyond paychecks. Work is about dignity and purpose as much as income. If millions lose their livelihoods, the damage won’t just show up on balance sheets—it will show up in communities stripped of stability, in families under strain, and in the growing anger of people who feel discarded. We’ve already seen glimpses of this with automation in manufacturing and retail. AI threatens to take that disruption into white-collar professions once thought untouchable.

People worried about rent or food don’t have the luxury of worrying about carbon emissions. Social upheaval makes climate action far more difficult. When day-to-day survival is the priority, saving the environment falls by the wayside.

Since the widespread adoption of generative AI, early-career workers (ages 22–25) in the most AI-exposed occupations have experienced a 13 percent decline in employment.

- Stanford University, Digital Economy Lab (2025)

From there, the consequences could spiral. If the wealth gap keeps widening, the middle class could be gutted, leaving fewer people able to afford what the wealthy are selling. That isn’t just unjust, it’s unsustainable. History shows what happens when inequality stretches too far—societies fracture, trust erodes, and entire economies collapse. The wealthy may profit in the short term, but by hollowing out the middle they erode their own future, cutting away the very base that sustains them.

The irony is that the very tools the super-rich are eager to exploit carry the seeds of their own undoing. For decades, it has taken vast sums of money and sprawling institutions to make a blockbuster film, launch a video game studio, or produce an album. But with AI, an individual could one day create an entire feature-length movie from a single laptop—complete with AI-generated actors, cinematography, sound and music—without ever needing a Hollywood budget or a corporate backer.

The same technology that threatens to wipe out creative jobs could also strip power from those who bankroll them. By racing to replace human labor with machines, the rich may discover they’ve also replaced the very industries that built their empires. In chasing short-term gains, they risk dismantling the long-term structures that sustain their wealth.

Generative AI will enable access to a superhuman breadth of knowledge to develop new tools of disruption and conflict, from malware to biological weapons.

- World Economic Forum, Global Risks Report (2024)

The second risk is cultural. AI is trained on the work of countless writers, artists, filmmakers, and musicians—without permission or compensation. Now it can generate paintings, scripts, and even video games on command. What once took years of practice and imagination can be mimicked in seconds.

The danger isn’t only economic, though the loss of creative jobs is devastating. It undermines the human experience itself. Art was never meant to be efficient; it’s how we tell stories, wrestle with meaning, and make life worth living. AI was supposed to take over the tedious work so we’d have more time for creativity. Instead, it threatens to automate and cheaply commodify the very things we love, leaving us with more drudgery and less joy. If originality can be copied and repackaged endlessly, the culture that binds us together begins to erode.

But the greater threat goes beyond art. The same ability to generate words and images can just as easily be turned toward propaganda. Outrage already drives engagement on social media, and the algorithms reward whatever keeps us hooked. AI can flood those channels with synthetic voices, fake images, and articles tuned to our biases—each one pushing us further apart.

We’re already in a culture war where disinformation is weaponized to divide us—left against right, neighbor against neighbor—while the real divide goes unnoticed: up versus down, rich versus poor. AI risks taking that playbook and supercharging it, flooding the public square with convincing falsehoods until the line between truth and fiction disappears.

And that’s the real danger. When truth itself feels negotiable, trust collapses. And once trust is gone, collective action collapses with it. Why fight for the climate—or anything else—if you can’t even agree on what’s real? A society that can’t tell fact from fiction won’t solve climate change. It won’t solve anything.

Given the speed and scale at which they are capable of operating, autonomous weapons systems introduce the risk of accidental and rapid conflict escalation.

- RAND Research Report (2020), via AutonomousWeapons.org

The third risk is existential. AI is already creeping into decision-making once reserved for humans—who gets hired, who receives a loan, even who lives or dies on the battlefield. Algorithms trained to maximize efficiency can become weapons, stripping away accountability while amplifying destruction.

The idea of machines making choices in war should chill us. Yet militaries around the world are moving in that direction, experimenting with drones and autonomous systems guided by AI. In a split second, an algorithm could determine that one group of people lives and another dies—with no human deliberation, no room for mercy, no responsibility if it gets it wrong.

And that may be the deepest danger. When AI makes life-and-death decisions, responsibility evaporates. Who do we hold accountable for an attack that killed innocents—the commander, the coder, or the machine itself? Humans can at least be judged for their choices. Algorithms cannot.

What’s emerging is a new arms race. In the last century, it was nuclear stockpiles. Now it’s AI weapons—drone swarms, autonomous targeting systems, algorithms built to strike faster than any human could. Artificial pilots aren’t limited by the crushing forces that strain human bodies in jet fighters. They can maneuver in ways that would kill a human pilot, giving them an enormous advantage in air-to-air combat.

The first nation to achieve dominance will hold a massive tactical and strategic edge—and every military leader around the world knows it. The danger isn’t only that machines might make catastrophic mistakes. It’s that the race itself makes those mistakes inevitable. By outsourcing morality to software, we risk normalizing decisions without conscience. A society that allows machines to make its hardest choices risks losing more than control—it risks losing what makes us human.

Concentrated power isn’t just a problem for markets. Relying on a few unaccountable corporate actors for core infrastructure is a problem for democracy, culture, and individual and collective agency.

- The AI Now Institute, via MIT Technology Review (2023)

Threaded through all of these risks is power. Who builds AI, who controls it, and who it serves will decide whether it accelerates solutions or deepens the crisis. Economic disruption could hollow out the middle class. Cultural risks could undermine trust in truth and fracture the shared reality we need to act. Existential risks could strip away accountability and humanity itself.

AI is not inherently good or bad—it reflects the values we embed in it. If guided with care, it could become one of the most powerful tools humanity has ever had. If left unchecked, it could gut our economies, erode our humanity, and poison the very culture that binds us together. The stakes are that high, and the window to set the rules is closing fast. But rules alone won’t save us. What matters is who enforces them—and who fights back when they’re ignored.

We are already in an information war. State-backed troll farms and coordinated bot networks have been used as tools of influence and division for years, most famously by the Kremlin-linked Internet Research Agency, which ran large-scale campaigns to polarize public opinion and spread falsehoods. We can’t pretend those tools don’t exist or that they operate only in the shadows. They are deliberate, documented, and effective.

Climate denial and delay are prime targets. Disinformation campaigns—funded by vested interests and amplified by automated networks—have been effective at sowing doubt, slowing policy, and eroding public trust in science. When people can’t agree on basic facts, the political space for serious action disappears. That is exactly what these campaigns are designed to do.

We need to stop pretending that the rule of law will keep us safe. Bad actors don’t care about laws. They operate across borders, shielded by governments that reward or ignore their behavior. Russia, China, Iran, and others are already using armies of bots and fake accounts to distort public opinion, destabilize democracies, and flood social media with disinformation. They exploit the openness of our systems because they know we’ll hesitate to fight back.

You can’t stop criminals by passing more laws, and that’s especially true when the offenders are governments themselves. You can’t prosecute them. You can’t send in the police. They operate online with impunity. The only way to stop them is to fight back, and not with one hand tied behind your back. If we keep playing by the rules while they wage information warfare to divide us, we will lose again and again. The only way to win is to take the fight to them and use the same tools they use, but do it with integrity, transparency, and purpose.

That means flipping the script and using AI to fight back.

Major fossil fuel companies knew for decades that their products would drive climate change and that curbing emissions would threaten profits. Rather than act, they funded a long-running disinformation campaign to delay action and mislead the public.

- Union of Concerned Scientists, via “Decades of Deceit” Report (2025)

Here’s the core of the idea. Build a public-interest network of AI agents—not corporate, not anonymous, but accountable. They would be transparent, clearly labeled, and open to audit. Their job would be simple. When a false or misleading claim spreads on social media, they respond quickly and calmly with clear, sourced information. The intent would be to invite conversation. No shaming. No censorship. No spam. Just facts—drawn from verified science, international climate research, and independent studies. Over time, thousands—if not millions—of small, civil corrections done at scale can change public discourse. Not to silence anyone, but to make the truth louder.

Every false claim, every lie, every attempt to mislead—whether through social media or television—could be challenged in real time. And not by a human team working around the clock, but by machines that never sleep, never tire, and never take the bait. These AI systems could reply across languages and platforms, calmly dismantling disinformation wherever it appears.

Trust would be essential. These systems couldn’t be owned by a single government or corporation. The most viable model would be a multinational public-interest organization with the credibility and reach of the UN, but with independent, non-governmental oversight through universities, scientific bodies, and NGOs. It could be financed through a pooled international fund and structured much like UNICEF or the Intergovernmental Panel on Climate Change (IPCC), but dedicated to protecting truth itself. Every post logged. Every source verifiable. Every system open to public inspection. People wouldn’t have to take its word for it; they could see for themselves.

Evidence-based dialogues with generative AI reduced conspiracy beliefs by about 20%—with effects that persisted for months and even weakened belief in unrelated false narratives.

- Thomas H. Costello et al., Science, Volume 385, Issue 6714 (2024)

Technically, much of this can be built with tools that already exist. A dual-system approach could help limit the impact of AI hallucinations. One layer would focus on outward dialogue: the chatbot itself. The other would work behind the scenes, fact-checking every response before it’s posted. Each reply would be cross-checked against trusted databases and primary research. If no credible source exists, the AI would simply say so. No bluffing, no spin, just transparency. Over time, the consistency of that honesty would build credibility.

Yes, there are risks. Generative AI can make mistakes, and bad actors will try to exploit or impersonate these systems. But those challenges can be mitigated with transparency, auditability, and public accountability. Every response should disclose that it’s AI-generated, list its sources, and explain its confidence level. Mistakes will happen, but they’ll be visible, correctable, and open to scrutiny.
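The dual-system approach can be sketched in a few lines of code. Everything here is hypothetical: `TRUSTED_SOURCES` stands in for a curated database of primary research, keyword overlap stands in for real retrieval and human review, and `draft_reply` is a placeholder rather than an actual model. What the sketch shows is the control flow: a drafted reply goes out only if a second layer finds supporting sources, every reply is labeled as AI-generated with its sources and a confidence level, and when nothing credible turns up, the system says so instead of guessing.

```python
import re
from dataclasses import dataclass, field

# HYPOTHETICAL stand-in for a curated database of vetted sources.
# Each entry maps a source label to keywords it can speak to.
TRUSTED_SOURCES: dict[str, set[str]] = {
    "IPCC AR6 Synthesis Report": {"warming", "human", "emissions"},
    "NOAA Global Monitoring Laboratory": {"co2", "concentration"},
}


@dataclass
class Reply:
    text: str
    sources: list[str] = field(default_factory=list)
    confidence: str = "none"
    ai_generated: bool = True  # every reply discloses that it is AI-generated


def draft_reply(claim: str) -> str:
    """Outward-facing layer. Placeholder for a conversational model."""
    return f"Here is what the evidence says about: {claim!r}"


def verify(claim: str) -> list[str]:
    """Behind-the-scenes layer: list sources whose keywords appear in the claim.
    Keyword overlap is a toy substitute for real retrieval and human review."""
    words = set(re.findall(r"[a-z0-9]+", claim.lower()))
    return [name for name, keys in TRUSTED_SOURCES.items() if words & keys]


def respond(claim: str) -> Reply:
    """Post a drafted reply only if the verification layer finds support."""
    sources = verify(claim)
    if not sources:
        # No credible source found: say so instead of bluffing.
        return Reply(text="I couldn't find a credible source on this, so I won't guess.")
    # Toy heuristic: more independent supporting sources -> higher stated confidence.
    confidence = "high" if len(sources) > 1 else "moderate"
    return Reply(text=draft_reply(claim), sources=sources, confidence=confidence)
```

For example, `respond("CO2 concentration isn't rising")` returns a reply citing the NOAA placeholder with moderate confidence, while a claim that matches no source returns the explicit "couldn't find a credible source" message. Keeping verification as a separate step means the drafting layer can never post on its own authority.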

Parts of this idea have already been tested at smaller scales. The United Nations and several governments have begun developing initiatives to counter climate misinformation. Researchers have shown that brief dialogues with conversational AI systems, lasting less than 10 minutes on average, can reduce belief in conspiracy theories by roughly 20 percent and strengthen public understanding of verified facts, with effects that persist for months and even extend to unrelated false claims. The proof of concept already exists. What’s missing is scale, and the collective will to use it.

Russia, China, Iran, Venezuela, and others collaborate around one big narrative—the idea that autocracy is stable and safe, and democracy, especially American democracy, is used and divided.

- Anne Applebaum, Senior Fellow at SNF Agora Institute, via Johns Hopkins University (2024)

We also have to face the geopolitical reality. Many of the worst offenders are backed by states, and law enforcement alone can’t stop them. Defensive AI systems will need to match the sophistication of their adversaries—detecting coordinated bot networks, countering disinformation campaigns, and working with independent researchers to expose when those efforts are organized or state-backed.

Ideally, this would become an international effort, but it doesn’t have to start that way. A single nation—or even a coalition of willing partners—could lead by example, proving that shared truth and factual integrity are worth defending. Over time, shared data standards, open auditing, and cross-border collaboration would make it harder for bad actors to hide—and harder for anyone to dismiss these systems as propaganda.

It’s a bold idea, and it should be. Criminal networks and hostile states have used automation to gain power and shape public opinion for years. If defenders refuse to use the same tools, they’re surrendering the field. But boldness without integrity is dangerous. So the goal isn’t to fight fire with fire—by spreading our own disinformation—but to fight lies with truth. These AI systems must be transparent, accountable, and civil. They should correct misinformation, cite credible sources, and respond with clarity instead of hostility. They should be built like public institutions—funded openly, audited independently, and trusted because they’ve earned it. In short, they should be the calm, persistent voice of reason that the internet so badly needs.

If we can build that, we can begin to repair what’s been broken. We can bridge the divide between the political left and right, rebuild a sense of shared reality, and make truth visible again. Climate change propaganda would no longer go unchallenged. Lies designed to divide and delay would be countered in real time by facts that can’t be shouted down. And once truth can stand on its own again, we can finally focus on what matters—acting together to fix what’s still within our power to change.

AI is the most disruptive technology since the dawn of the industrial revolution. How it will shape our world remains to be seen, but its impact is undeniable. It will serve as a mirror to the human race, and what’s reflected back will depend on us. It can amplify our darkest impulses—greed, fear, indifference—or it can become one of humanity’s greatest allies. The reality will likely fall somewhere in between. But if we are to avoid the most destructive outcomes, we must act with intention, humility, and urgency.

The world doesn’t need more power in the hands of the few—it needs wiser hands to hold it. That’s what this moment demands of us. AI will not save us from ourselves. It can help us see patterns, reveal possibilities, and test solutions—but it cannot give us purpose, meaning, or the will to act. That has to come from us. What we choose to build, and why, will decide whether this technology deepens the crisis or helps us rise above it.

Every great turning point in human history has carried this same tension. Fire could warm or destroy. The atom could light cities or destroy them. AI is no different—it’s a force multiplier for whatever values we choose to amplify. If we chase convenience and profit above all else, we will automate our own decline. But if we use it to confront our greatest challenges, to heal what’s broken, and to build a more sustainable world, it could become the catalyst for a new era of progress.

The decades ahead will test more than our ingenuity—they will test our character. The story of AI and the story of climate change are, in the end, the same story: a question of whether humanity can wield its own power responsibly. Our machines are becoming more capable than ever. The real question is whether we can guide them with wisdom and purpose—and prove ourselves worthy stewards of the world we’ve been given.


Want to dive deeper? A full list of sources and further reading for this chapter is available at: www.themundi.com/book/sources
